SubjECTive-QA: A dataset for the subjective evaluation of answers in Earnings Call Transcripts (ECTs)

Abstract

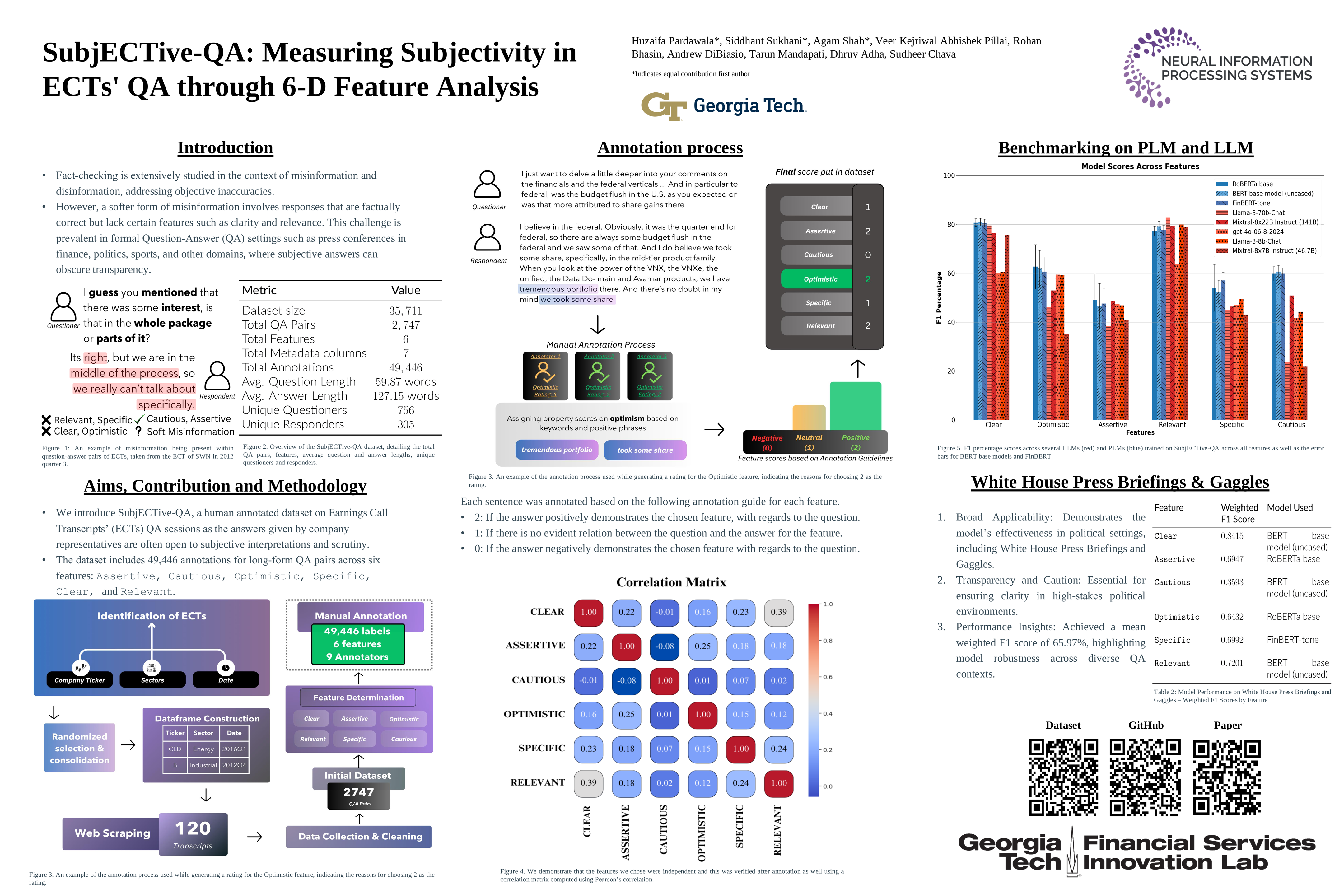

Fact-checking is extensively studied in the context of misinformation and disinformation, addressing objective inaccuracies. However, a softer form of misinformation involves responses that are factually correct but lack certain features such as clarity and relevance. This challenge is prevalent in formal Question-Answer (QA) settings such as press conferences in finance, politics, sports, and other domains, where subjective answers can obscure transparency. Despite this, manually annotated datasets covering subjective features across multiple dimensions are lacking. To address this gap, we introduce SubjECTive-QA, a dataset manually annotated by nine annotators on Earnings Call Transcripts (ECTs), chosen because companies' statements in these calls are often subjective and open to scrutiny. The dataset includes 2,747 annotated long-form QA pairs across six features: Assertive, Cautious, Optimistic, Specific, Clear, and Relevant. Benchmarking on our dataset reveals that the best-performing Pre-trained Language Model (PLM), RoBERTa-base, achieves weighted F1 scores similar to those of Llama-3-70b-Chat on features with lower subjectivity, such as Relevant and Clear, with a mean difference of 2.17% in their weighted F1 scores. On features with higher subjectivity, such as Specific and Assertive, it performs significantly better, with a mean difference of 10.01% in their weighted F1 scores. Furthermore, testing SubjECTive-QA's generalizability using QAs from White House Press Briefings and Gaggles yields an average weighted F1 score of 65.97% using our best models for each feature, demonstrating broader applicability beyond the financial domain. SubjECTive-QA is currently made available anonymously under the CC BY 4.0 license.
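As an illustration of the metric reported above, the following is a minimal sketch of a support-weighted F1 score in plain Python. The function name `weighted_f1` and the toy labels are hypothetical and are not taken from the dataset; the evaluation code actually used for the benchmarks may differ.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Return the support-weighted mean of per-class F1 scores.

    Each class's F1 is weighted by the fraction of true examples
    that belong to that class. (Hypothetical helper for illustration.)
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("inputs must not be empty")

    support = Counter(y_true)  # number of true examples per class
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = n - tp
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0
        f1 = 2 * tp / denom if denom else 0.0
        score += (n / total) * f1
    return score


# Toy example with three assumed rating classes for one feature
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
print(round(weighted_f1(y_true, y_pred), 4))
```

Weighting by class support means that frequent ratings contribute more to the score than rare ones, which matters when the label distribution for a feature is imbalanced.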