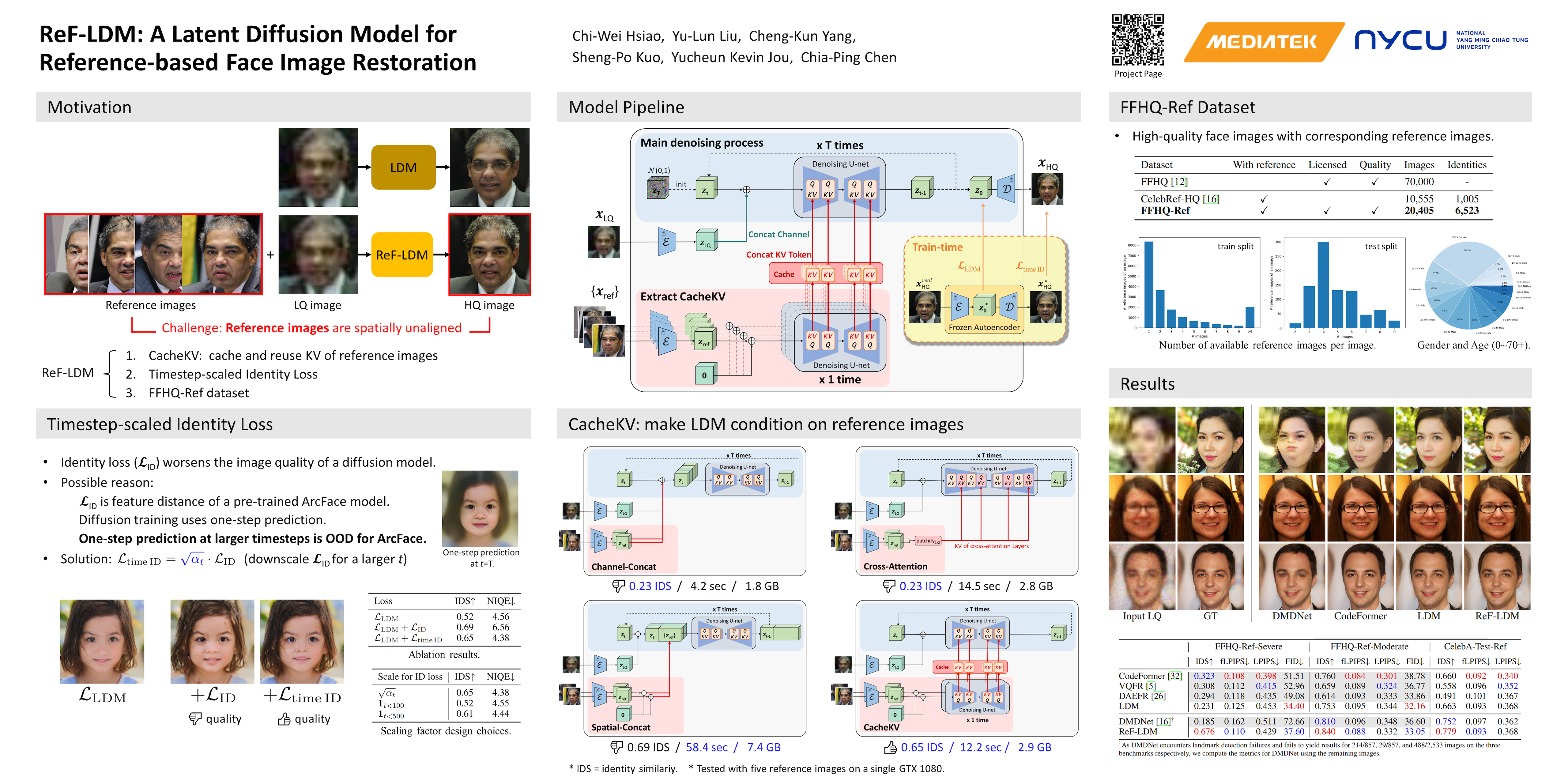

ReF-LDM: A Latent Diffusion Model for Reference-based Face Image Restoration

Abstract

While recent works on blind face image restoration have successfully produced impressive high-quality (HQ) images with abundant details from low-quality (LQ) input images, the generated content may not accurately reflect the real appearance of a person. To address this problem, incorporating well-shot personal images as additional reference inputs may be a promising strategy. Inspired by the recent success of the Latent Diffusion Model (LDM) in image generation, we propose ReF-LDM—an adaptation of LDM designed to generate HQ face images conditioned on one LQ image and multiple HQ reference images. Our LDM-based model incorporates an effective and efficient mechanism, CacheKV, for conditioning on reference images. Additionally, we design a timestep-scaled identity loss, enabling LDM to focus on learning the discriminating features of human faces. Lastly, we construct FFHQ-ref, a dataset consisting of 20,406 high-quality (HQ) face images with corresponding reference images, which can serve as both training and evaluation data for reference-based face restoration models.